
Dog Breed Image Identification with CNN

Motivation

Dogs, nicknamed doggos and puppers, have been humans’ best friends and helpers since the beginning of human history. If you are a dog owner, you have probably been asked about your dog’s breed. Dog breeds differ not only in size and appearance but also in temperament and traits. Sometimes breed is the deciding factor in whether a dog can take on a certain job (support animal, K-9, etc.) or suits a particular owner (hypoallergenic for sensitive owners, good temperament around babies, etc.). The American Kennel Club (AKC) currently recognizes 190 dog breeds in the United States alone, and the Fédération Cynologique Internationale (FCI) recognizes more than 360 pure breeds worldwide. However, these figures do not include mixed breeds or designer crossbreeds such as the popular goldendoodle or puggle. The official classification rules can be quite confusing, but with some effort you can still find a pure breed’s information. Mixed breeds are much trickier: owners who wish to know their mixed dog’s breeds often need a genetic test. My question is: can you tell a dog’s breed just by looking at a picture?

Goal

I hope to use image classification techniques, specifically convolutional neural networks (CNNs), to help determine the breed of a dog. By uploading several pictures to an image classification program, users would be able to find out their dog’s breed.

Data Set - Kaggle's Dog Breed Identification Dataset

Kaggle’s dataset is free, pre-labeled, and widely used and studied. It is derived from the Stanford Dogs Dataset, whose original creators are Aditya Khosla, Nityananda Jayadevaprakash, Bangpeng Yao, and Fei-Fei Li.

Exploratory Analysis

The training image set contains 10,222 pictures of 120 different dog breeds. The breakdown by breed is shown in the following graph:

To understand the image sizes, I also plotted the width and height of each image:

Here are a few more cute images from the dataset:

Data Cleaning

Two important observations from the bar plot and scatter plot above are that the dataset is not balanced and that image quality is uneven:
1. Scottish deerhounds have almost twice as many pictures as Eskimo dogs.
2. Images vary widely in size and resolution.

Because these factors may undermine training, I decided to use the Keras library’s ImageDataGenerator class. Given the desired target size, the generator produces resized and adjusted images. We can also ask the generator to create augmented variants of the existing images by shifting the center of the image, zooming in/out, changing the color, reflecting the image over the vertical/horizontal axis, and more. The parameters I used are:

Since color and proportion are distinguishing traits among dog breeds, I decided not to adjust the color or zoom in/out. I also used the 80-20 train/validation split shown above.
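The sketch below makes the generator's augmentation options more concrete. It is a minimal, library-free example of two of the transformations mentioned above:

- a horizontal flip, which reflects the image over its vertical axis;
- a horizontal shift.

Both are applied to a tiny grayscale "image" stored as a list of rows. The sketch only illustrates the idea and is not how Keras's ImageDataGenerator is implemented. The helper names `flip_horizontal` and `shift_horizontal` are hypothetical. Pixels uncovered by the shift repeat the nearest edge pixel, similar in spirit to the generator's default fill_mode="nearest".

```python
from typing import List

Image = List[List[int]]  # a toy grayscale image: a list of rows of pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror the image over its vertical axis (left <-> right)."""
    return [list(reversed(row)) for row in img]


def shift_horizontal(img: Image, dx: int) -> Image:
    """Shift the image content right by dx pixels (left if dx < 0).

    Uncovered pixels copy the nearest edge pixel, similar in spirit
    to fill_mode="nearest".
    """
    width = len(img[0])
    shifted = []
    for row in img:
        new_row = []
        for x in range(width):
            src = x - dx                       # source column for this output pixel
            src = min(max(src, 0), width - 1)  # clamp to the nearest edge column
            new_row.append(row[src])
        shifted.append(new_row)
    return shifted


toy = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
]

print(flip_horizontal(toy))      # [[4, 3, 2, 1], [8, 7, 6, 5]]
print(shift_horizontal(toy, 1))  # [[1, 1, 2, 3], [5, 5, 6, 7]]
```

In practice the generator applies such transformations randomly each time it yields a batch. As a result, the model sees slightly different versions of each image from epoch to epoch.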

Model

Layers used:
a. Conv2D - a 2D convolution layer that slides learned filters over the image to detect local features
b. MaxPooling2D - downsamples each feature map by keeping the maximum value in each pooling window
c. Flatten - reshapes the multi-dimensional feature maps into a single 1D vector
d. Dense - a fully connected layer
e. Dropout - randomly drops a fraction of its inputs during training, for regularization

With the Keras library, one can initialize a CNN model simply by calling model = Sequential() and then add layers to it by calling model.add(<layer>). Here is a copy of the final setup:

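We can then train the model by calling fit_generator with the training generator, the validation generator, and the training settings (steps_per_epoch, epochs, and validation_steps). In TensorFlow 2.1 and later, fit_generator is deprecated and model.fit accepts generators directly.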

This design is inspired by the VGG neural network model. I used two Conv2D layers with a MaxPooling2D layer in between, then added dense layers at the end, with a dropout layer for regularization.
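The exact layer sizes appear only in the figure above, so the sketch below uses hypothetical ones:

- a 128x128 RGB input;
- 32 and then 64 filters of size 3x3;
- a 256-unit dense layer;
- 20 output classes, matching the 20-breed scope mentioned under Limitations.

With these sizes, it traces how each layer of such a VGG-inspired stack changes the tensor shape and how many trainable parameters it adds. It uses the standard formulas for "valid" padding and stride 1. In this configuration almost all of the roughly 61 million parameters sit in the first Dense layer after Flatten. That is one plausible reason a model like this strains a laptop's memory.

```python
from typing import List, Tuple

Shape = Tuple[int, ...]


def conv2d(shape: Shape, filters: int, k: int) -> Tuple[Shape, int]:
    """'valid' padding, stride 1: each spatial side shrinks by k - 1."""
    h, w, c = shape
    params = (k * k * c + 1) * filters  # k*k*c weights per filter + one bias each
    return (h - k + 1, w - k + 1, filters), params


def max_pool(shape: Shape, p: int = 2) -> Tuple[Shape, int]:
    """Pool size p with stride p: spatial sides are floor-divided by p."""
    h, w, c = shape
    return (h // p, w // p, c), 0


def flatten(shape: Shape) -> Tuple[Shape, int]:
    """Collapse all dimensions into one; no trainable parameters."""
    n = 1
    for d in shape:
        n *= d
    return (n,), 0


def dense(shape: Shape, units: int) -> Tuple[Shape, int]:
    """Fully connected layer: weight matrix plus bias vector."""
    (n,) = shape
    return (units,), (n + 1) * units


# Hypothetical sizes: 128x128 RGB input, 20 breeds.
shape: Shape = (128, 128, 3)
layers: List[Tuple[str, Shape, int]] = []
for name, fn in [
    ("Conv2D(32, 3x3)", lambda s: conv2d(s, 32, 3)),
    ("MaxPooling2D(2x2)", max_pool),
    ("Conv2D(64, 3x3)", lambda s: conv2d(s, 64, 3)),
    ("Flatten", flatten),
    ("Dense(256)", lambda s: dense(s, 256)),
    ("Dropout", lambda s: (s, 0)),  # dropout changes neither shape nor params
    ("Dense(20)", lambda s: dense(s, 20)),
]:
    shape, params = fn(shape)
    layers.append((name, shape, params))
    print(f"{name:<18} {str(shape):<16} {params:>12,}")

total = sum(p for _, _, p in layers)
print(f"Total params: {total:,}")  # Total params: 60,989,652
```

Inserting more pooling before Flatten shrinks the flattened vector, and with it the size of that first Dense layer. VGG itself does this by stacking many convolution and pooling blocks.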

Result

For each image, the model outputs a probability for each breed (see picture above). I then take the column with the maximum probability and map it back to its breed label. Using this format, we could also select several columns with the highest or similar probabilities, which might indicate a mixed-breed dog.
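The selection step described above can be sketched in plain Python. First, take the breed with the highest probability, which is equivalent to the argmax column. Second, list every breed whose probability falls within a chosen margin of the top one, as possible mixed-breed candidates. The breed names, the probabilities, and the 0.10 margin are made-up values for illustration, not outputs of the model. The margin rule is a hypothetical heuristic.

```python
from typing import Dict, List, Tuple


def top_breed(probs: Dict[str, float]) -> str:
    """Equivalent to taking the argmax column and mapping it to its label."""
    return max(probs, key=probs.get)


def candidate_breeds(probs: Dict[str, float],
                     margin: float = 0.10) -> List[Tuple[str, float]]:
    """All breeds whose probability is within `margin` of the best one,
    sorted from most to least likely (a hypothetical mixed-breed heuristic)."""
    best = max(probs.values())
    close = [(b, p) for b, p in probs.items() if best - p <= margin]
    return sorted(close, key=lambda bp: bp[1], reverse=True)


# Made-up softmax output for one image (sums to 1.0).
probs = {
    "golden_retriever": 0.41,
    "standard_poodle": 0.35,
    "labrador_retriever": 0.15,
    "beagle": 0.09,
}
print(top_breed(probs))         # golden_retriever
print(candidate_breeds(probs))  # [('golden_retriever', 0.41), ('standard_poodle', 0.35)]
```

A classifier trained only on pure breeds has never seen a labeled mixed dog. Close probabilities are therefore a hint, not a diagnosis.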

Error Analysis

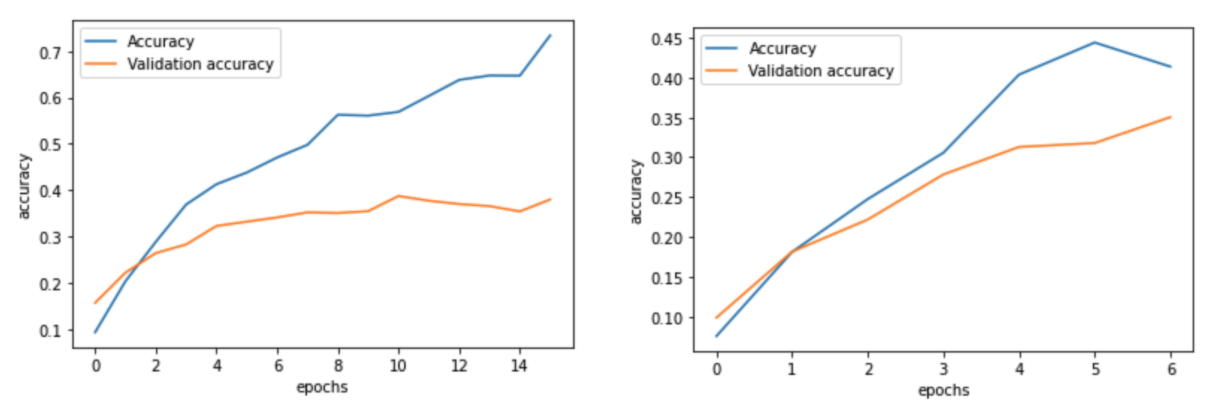

The accuracy is very low. One reason could be that I had to drastically cut down the training steps (steps per epoch, epochs, and validation steps) and the number of layers to prevent my laptop from crashing. The left accuracy graph uses 10 epochs with 10 steps per epoch, and the right graph uses 30 epochs with 30 steps per epoch (note that the vertical scales differ slightly). Based on the increasing trend in the right graph, I believe higher accuracy is achievable with longer training, but it would require substantial computational power.
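The training settings above determine how much data the model actually saw. The arithmetic below rests on three assumptions:

- the full 120-breed training set of 10,222 images;
- the 80-20 split;
- a batch size of 32, the default for Keras's flow_from_directory (the batch size actually used is not stated).

With the 20-breed subset mentioned under Limitations, the training set is smaller. Keras's per-class split may also differ by a few images. Treat these numbers as illustrative.

```python
import math

N_IMAGES = 10222    # training images in the full 120-breed Kaggle set
VAL_FRACTION = 0.2  # the 80-20 train/validation split
BATCH_SIZE = 32     # assumed: the Keras generator's default batch size

n_val = int(N_IMAGES * VAL_FRACTION)  # images held out for validation
n_train = N_IMAGES - n_val            # images left for training


def steps_for_full_epoch(n: int, batch_size: int) -> int:
    """Batches needed for the generator to show every training image once."""
    return math.ceil(n / batch_size)


def images_seen(steps_per_epoch: int, epochs: int, batch_size: int) -> int:
    """Total images drawn from the generator over a whole training run."""
    return steps_per_epoch * epochs * batch_size


print(n_train, n_val)                             # 8178 2044
print(steps_for_full_epoch(n_train, BATCH_SIZE))  # 256
print(images_seen(10, 10, BATCH_SIZE))            # 3200  (left graph settings)
print(images_seen(30, 30, BATCH_SIZE))            # 28800 (right graph settings)
```

Under these assumptions, a 10-step epoch shows the model only 320 images, about 4% of the 8,178 training images. The entire left-graph run therefore draws fewer images than a single full pass contains. The right-graph run amounts to roughly 3.5 passes.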

Second Model - Transfer Learning with Inception

Rather than training a model from scratch, one can use a pre-trained architecture such as VGG, ResNet, Inception, or Xception with pre-trained weights such as “imagenet”. I decided to try InceptionResNetV2, which can be imported from the keras.applications module. Here is a copy of the setup:

It is important to set the base model to non-trainable so that its pre-trained weights stay frozen while the new layers are trained. After adding the layers, compile the model. To prevent overfitting, I also added an early stopping callback that stops training when the validation accuracy stops improving.
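The stopping rule can be sketched without Keras. Track the best validation accuracy seen so far, and stop once `patience` consecutive epochs pass without beating it. This is a simplified model of Keras's EarlyStopping callback with monitor="val_accuracy" ("val_acc" in older Keras versions) and a given patience. It ignores options such as min_delta and restore_best_weights. The helper name and the accuracy history are invented for illustration.

```python
from typing import List


def early_stopping_epoch(val_acc: List[float], patience: int) -> int:
    """Return the 1-based epoch after which training would stop.

    Training stops once `patience` consecutive epochs fail to beat the best
    validation accuracy so far; otherwise it runs through every epoch.
    """
    best = float("-inf")
    wait = 0  # epochs since the last improvement
    for epoch, acc in enumerate(val_acc, start=1):
        if acc > best:
            best, wait = acc, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_acc)


# Invented validation accuracies: a peak at epoch 2, then no improvement.
history = [0.55, 0.62, 0.60, 0.61, 0.59, 0.60, 0.58, 0.63]
print(early_stopping_epoch(history, patience=4))  # 6
```

With a patience of 4, a peak at epoch 2 means training ends at epoch 6. The later improvement at epoch 8 is never reached, which is the trade-off a small patience makes.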

Because my laptop kept crashing, I moved to Google Colab and ran the same code. Here are the results:

The accuracy is significantly higher than that of the first model. The left graph uses 10 epochs with 10 steps per epoch and no callback. The right graph uses 20 epochs with 20 steps per epoch and an early stopping callback with a patience of 4 (note that the y-axes differ slightly). As the right graph shows, the validation accuracy peaks around the second epoch, and training stops after four further epochs without improvement. Moreover, the validation accuracy is much higher than the training accuracy, which suggests the model is struggling to fit the training data. There are several possible reasons. One is that the training and validation images are transformed to different degrees, but since I used the same data generator parameters for both, this should not be the issue here. Another suggestion is to train for longer, but in the interest of time I decided to simply try another model.

Third Model - Transfer Learning with VGG16

Using the same setup as above, I imported the VGG16 model instead. Here are the results:

The first run (left graph) uses 20 epochs with 20 steps per epoch, and I continued to use the early stopping callback. Here the overfitting problem is more pronounced: the training accuracy continues to increase while the validation accuracy plateaus. I therefore re-ran the model with an additional dropout layer between Flatten and Dense for regularization; the result is shown in the right graph. However, the accuracy is still lower than with InceptionResNetV2. Since the accuracy remains relatively low (below 70%), it is less worthwhile to pursue this VGG16 model than the InceptionResNetV2 model.
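For reference, here is a plain-Python sketch of what the added dropout layer does, following the behavior documented for Keras's Dropout layer:

- during training, each input is zeroed with probability `rate`;
- the surviving inputs are scaled by 1 / (1 - rate), so the expected activation stays the same;
- at inference time, the layer passes inputs through unchanged.

The function and its arguments are illustrative, not the Keras API.

```python
import random
from typing import List


def dropout(x: List[float], rate: float, training: bool,
            rng: random.Random) -> List[float]:
    """Inverted dropout: during training, zero each value with probability
    `rate` and scale survivors by 1 / (1 - rate); otherwise pass through."""
    if not training or rate == 0.0:
        return list(x)
    keep = 1.0 - rate
    # rng.random() is in [0, 1), so each value survives with probability `keep`.
    return [v / keep if rng.random() >= rate else 0.0 for v in x]


rng = random.Random(0)
acts = [1.0] * 10
print(dropout(acts, 0.5, training=False, rng=rng))  # unchanged: ten 1.0 values
out = dropout(acts, 0.5, training=True, rng=rng)
print(out)  # each entry is either 0.0 or 2.0
```

Each training step uses a different random mask. The dense layer therefore cannot rely on any single flattened feature, which is why dropout between Flatten and Dense tends to reduce overfitting.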

Limitations

Due to my laptop’s limited computing power, I narrowed the scope to only 20 breeds and used a minimal number of training steps. Although I later moved the code to Google Colab, training was still very time-consuming and I was only able to see part of the results. In the future, I would like to train on the full dataset and adopt a new model if necessary.

Conclusion and Future Work

Among the models I tried, the pre-trained models were relatively simple to create and yielded the best results so far. InceptionResNetV2 also gave better accuracy than VGG16 with the same training steps and setup described earlier. There are many possible improvements to this method, and I would first like to explore other pre-trained models with various hyperparameters. It is also possible to extract bottleneck features from the pre-trained models and then fit a logistic regression on them. The last reference, Gaborfodor’s Kaggle notebook “Dog Breed - Pretrained Keras Models (LB 0.3)”, demonstrates this approach and reports a significant improvement. Overall, I thoroughly enjoyed this project, learning to build my first CNN model with the cutest images ever.
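The second stage of the bottleneck-feature approach is a linear classifier trained on fixed feature vectors. Below is a minimal plain-Python sketch of binary logistic regression fitted by batch gradient descent. The two-dimensional "features" are invented stand-ins for the much longer vectors a frozen pre-trained network would output. The learning rate and epoch count are arbitrary choices. A real 120-breed setup would need a multinomial (softmax) version or a library implementation such as scikit-learn's LogisticRegression.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X: List[List[float]], y: List[int],
                 lr: float = 0.5, epochs: int = 500) -> Tuple[List[float], float]:
    """Batch gradient descent on the mean log-loss; returns (weights, bias)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log-loss w.r.t. the logit is (prediction - label).
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w: List[float], b: float, x: List[float]) -> int:
    """Class 1 if the predicted probability is at least 0.5, else class 0."""
    return int(sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5)


# Invented 2-D "bottleneck features" for two breeds (label 0 or 1).
X = [[0.2, 0.9], [0.1, 0.8], [0.3, 0.7], [0.9, 0.2], [0.8, 0.1], [0.7, 0.3]]
y = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic(X, y)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1, 1, 1]
```

The expensive step, running every image through the frozen network, happens only once. After that, fitting the classifier on the stored features is cheap, which suits limited hardware.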

Acknowledgment

I would like to thank R&P directors Michael Wang, Nicole Zhu, Aishani Sil for supporting me throughout the project.

References

Bilogur, A. (2019, April 2). Boost your CNN image classifier performance with progressive resizing in Keras. Towards Data Science. https://towardsdatascience.com/boost-your-cnn-image-classifier-performance-with-progressive-resizing-in-keras-a7d96da06e20

Odegua. (2020, May 9). Transfer learning and image classification using Keras on Kaggle kernels. Towards Data Science. https://towardsdatascience.com/transfer-learning-and-image-classification-using-keras-on-kaggle-kernels-c76d3b030649

Mooney, P. (n.d.). Identify dog breed from image. Kaggle. https://www.kaggle.com/paultimothymooney/identify-dog-breed-from-image

Gaborfodor. (n.d.). Dog breed - pretrained Keras models (LB 0.3). Kaggle. https://www.kaggle.com/gaborfodor/dog-breed-pretrained-keras-models-lb-0-3

Semester

Spring 2020

Researcher

Shiangyi